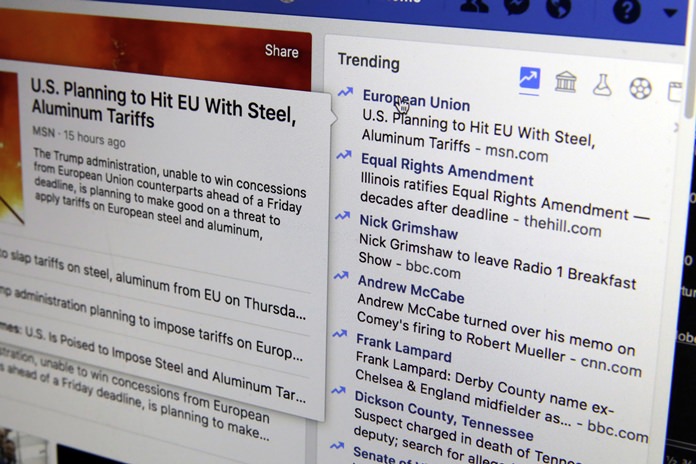

New York (AP) – Facebook is shutting down its ill-fated “trending” news section after four years, a company executive told The Associated Press.

The company claims the tool is outdated and wasn’t popular. But the trending section also proved problematic in ways that would presage Facebook’s later problems with fake news, political balance and the limitations of artificial intelligence in managing the messy human world.

When Facebook launched “trending” in 2014 as a list of headlines to the side of the main news feed, it was a straightforward move to steal users from Twitter by giving them a quick look at the most popular news of the moment. It fit nicely into CEO Mark Zuckerberg’s pledge just a year earlier to make Facebook its users’ “personal newspaper.”

But that was then. “Fake news” wasn’t yet a popular term, and no foreign country had been accused of trying to influence the U.S. elections through social media, as Russia later would be. Trending news that year included the death of Robin Williams, Ebola and the World Cup.

Facebook is now testing new features, including a “breaking news” label that publishers can add to stories to distinguish them from other chatter. Facebook also wants to make local news more prominent.

“It’s very good to get rid of ‘trending,’” said Frank Pasquale, a law professor at the University of Maryland and expert on algorithms and society. He said algorithms are good for very narrow, well-defined tasks. By contrast, he said, deciding what news stories should go in “trending” requires broad thinking, quick judgments about context and decisions about whether someone is trying to game the system.

In an interview ahead of Friday’s announcement, Facebook’s head of news products, Alex Hardiman, said the company is still committed to breaking and real-time news. But instead of having Facebook’s moderators, human or otherwise, make editorial decisions, there’s been a subtle shift to let news organizations do so.

According to the Pew Research Center, 44 percent of U.S. adults get some or all of their news through Facebook.

Troubles with the trending section began to emerge in 2016, when the company was accused of bias against conservatives, based on the words of an anonymous former contractor who said Facebook downplayed conservative issues in that feature and promoted liberal causes. Zuckerberg met with prominent right-wing leaders at the company’s headquarters in an attempt at damage control. Yet two years later, Facebook still hasn’t been able to shake the notion of bias.

In late 2016, Facebook fired the human editors who worked on the trending topics and replaced them with software that was supposed to be free of political bias. Instead, the software algorithm began to pick out posts that were getting the most attention, even if the information in them was bogus. In early 2017, Facebook made another attempt to fix the trending section, this time by including only topics covered by several news publishers. The thinking was that coverage by just one outlet could be a sign that the news is fake.

The troubles underscore the difficulty of relying on computers, even artificial intelligence, to make sense of the messy human world without committing obvious, sometimes embarrassing and occasionally disastrous errors.

Ultimately, Facebook appears to have concluded that trying to fix the headaches around trending wasn’t worth the meager benefit the company, users and news publishers saw in it.

“There are other ways for us to better invest our resources,” Hardiman said.

Pasquale said Facebook’s new efforts represent “very slow steps” toward an acknowledgement that the company is making editorial judgments when it decides what news should be shown to users – and that it needs to empower journalists and editors to do so.

But what needs to happen now, he added, is a broad shift in the company’s corporate culture, recognizing the expertise involved in journalistic judgment. The changes and features Facebook is putting out, he said, are being treated as “bug fixes” – addressing single problems the way engineers do.

“What they are not doing is giving an overall account of their mission on how these fixes fit together,” Pasquale said.

The “breaking news” label that Facebook is testing with 80 news publishers around the world will let outlets such as The Washington Post add a red label to indicate that a story is breaking news, highlighting it for users who want accurate information as things are happening.

“Breaking news has to look different than a recipe,” Hardiman said.

Another feature, called “Today In,” shows people breaking news in their area from local publishers, officials and organizations. It’s being tested in 30 markets in the U.S. Hardiman said the goal is to help “elevate great local journalism.” The company is also funding news videos, created exclusively for Facebook by outside publishers it would not yet name. It plans to launch this feature in the next few months.

Facebook says the trending section wasn’t a popular feature to begin with. It was available only in five countries and accounted for less than 1.5 percent of clicks to the websites of news publishers, according to the company.

While Facebook got outsized attention for the problems the trending section had (perhaps because it seemed popular with journalists and editors), neither its existence nor its removal makes much of a difference when it comes to Facebook’s broader problems with news.

Hardiman said ending the trending section feels like letting a child go. But she said Facebook’s focus now is prioritizing trustworthy, informative news that people find useful.
